Sensor enables high-fidelity input from everyday objects, human body

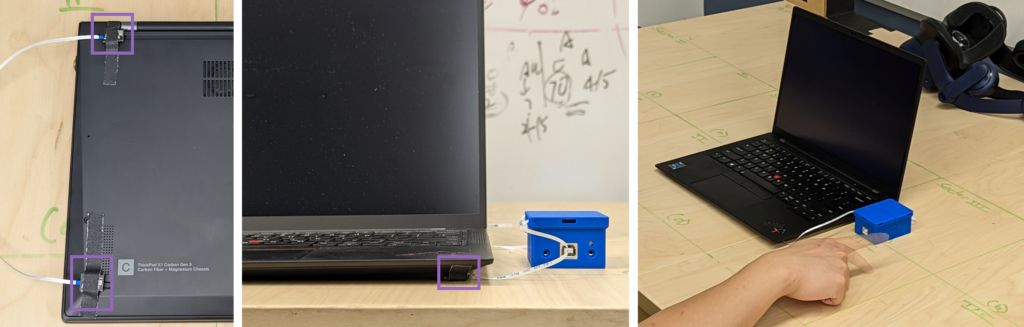

A new sensing system has been developed at the University of Michigan that can turn nearly any surface into a high-fidelity input device for computers, from couches and tables to sleeves and the human body.

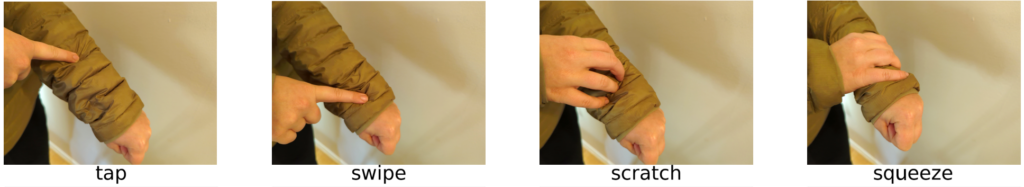

Called SAWSense, the system repurposes technology from new bone-conduction microphones to detect only those acoustic waves that travel along the surface of objects. It works in noisy environments, along odd geometries such as toys and arms, and on soft fabrics such as clothing and furniture. It recognizes different inputs, such as taps, scratches, and swipes, with 97% accuracy and no need for touch screens, pads, cameras, or other common input electronics. This has enabled the researchers to demonstrate things such as using a normal table to replace a laptop’s trackpad.

“This technology will enable you to treat, for example, the whole surface of your body like an interactive surface,” says Yasha Iravantchi, doctoral candidate in computer science and engineering and lead author. “If you put the device on your wrist, you can do gestures on your own skin. We have preliminary findings that demonstrate this is entirely feasible.”

The sensor takes advantage of a phenomenon called surface-acoustic waves to provide any computing system with reliable data about how and when an object is being touched. Distinct from vibrations, these waves are actual acoustic waves that travel along the surface of materials. These waves are then classified with machine learning to turn all touch into a robust set of inputs. The system was presented on April 24 at the 2023 Conference on Human Factors in Computing Systems (CHI ’23), where it received a Best Paper Award.

As more objects incorporate smart or connected technology, designers face a number of challenges in giving them intuitive input mechanisms. The result is often a clunky incorporation of input methods such as touch screens, or the need to connect a device directly to a computer or network in order to interact with it between uses.

Past attempts to overcome these limitations have relied on approaches such as the use of microphones and cameras for audio- and gesture-based inputs, but, the authors say, techniques like these have limited practicality in the real world.

“When there’s a lot of background noise, or something comes between the user and the camera, audio and visual gesture inputs don’t work well,” says Iravantchi.

The sensors powering SAWSense, called Voice PickUp units (VPUs), overcome these limitations with a number of unique design traits. The sensor itself is housed in a hermetically sealed chamber that completely blocks even very loud ambient noise. The only entryway is through a mass-spring system that conducts the surface-acoustic waves inside the housing without ever coming in contact with sounds in the surrounding environment.

This results in a sensor that can perfectly record the events along an object’s surface, and only those events.

“There are other ways you could detect vibrations or surface-acoustic waves, like piezo-electric sensors or accelerometers,” says Alanson Sample, an associate professor of electrical engineering and computer science, “but they don’t have the frequency bandwidth to capture the broad range of signals we’re interested in. This sensor allows us to capture surface-acoustic waves, reject external waves, and get a lot of interesting data.”

This high bandwidth is what powers SAWSense’s interaction recognition capabilities. The difference between a swipe and a scratch is subtle, and robust audio data is necessary to detect it reliably. This fidelity allows SAWSense to act as a surface sensor for other settings as well, such as a kitchen countertop that can identify when it’s being used to chop, stir, blend, or whisk.
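To make the recognition step concrete, here is a minimal sketch of how surface events might be told apart from their waveforms. It is not the researchers' pipeline: the article says only that the waves are classified with machine learning, so the nearest-centroid classifier, the two toy features (zero-crossing rate and crest factor), the 8 kHz sample rate, and the synthetic "tap" and "scratch" signals are all assumptions chosen for illustration.

```python
import math
import random

SAMPLE_RATE = 8000  # Hz; assumed for illustration, not taken from the article


def synth_tap(rng, n=800):
    """Hypothetical tap: a short, quickly decaying low-frequency burst."""
    freq = rng.uniform(150, 250)
    return [math.exp(-t / 60.0) * math.sin(2 * math.pi * freq * t / SAMPLE_RATE)
            for t in range(n)]


def synth_scratch(rng, n=800):
    """Hypothetical scratch: sustained broadband noise."""
    return [rng.uniform(-0.3, 0.3) for _ in range(n)]


def features(signal):
    """Two toy features: zero-crossing rate and crest factor (peak / RMS)."""
    crossings = sum(1 for a, b in zip(signal, signal[1:]) if (a < 0) != (b < 0))
    zcr = crossings / (len(signal) - 1)
    rms = math.sqrt(sum(x * x for x in signal) / len(signal))
    crest = max(abs(x) for x in signal) / rms
    return (zcr, crest)


def centroid(rows):
    """Component-wise mean of a list of feature tuples."""
    return tuple(sum(col) / len(rows) for col in zip(*rows))


def train(examples):
    """examples maps label -> list of signals; returns label -> feature centroid."""
    return {label: centroid([features(s) for s in signals])
            for label, signals in examples.items()}


def classify(model, signal):
    """Return the label whose centroid is closest to the signal's features."""
    f = features(signal)
    return min(model, key=lambda label: math.dist(f, model[label]))


# Usage: train on synthetic examples, then classify a fresh signal.
rng = random.Random(0)
model = train({
    "tap": [synth_tap(rng) for _ in range(20)],
    "scratch": [synth_scratch(rng) for _ in range(20)],
})
label = classify(model, synth_tap(rng))
```

A real system would need far richer features to separate subtle cases such as a swipe versus a scratch, which is where the sensor's bandwidth matters.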

The reliance on surface-acoustic waves for these types of in-home smart technologies offers an appealing privacy-sensitive alternative to traditional methods reliant on cameras and standard microphones. These waves are entirely bound to a single object: if two tables are placed close to each other, SAWSense would detect contact only with the table housing the VPU.

“VPUs do a good job of sensing activities and events happening in a well-defined area,” says Iravantchi. “This allows the functionality that comes with a smart object without the privacy concerns of a standard microphone that senses the whole room, for example.”

When multiple VPUs are used in combination, SAWSense could enable more specific and sensitive inputs, especially those that require a sense of space and distance like the keys on a keyboard or buttons on a remote.

“There’s a long tradition of using arrays to localize things – like arrays of antennas,” says Sample. “The next step is using multiple VPUs to localize surface-acoustic waves. You can also combine them with other modalities like vision, or a language model, to correct input errors.”
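As a rough illustration of the array idea, the sketch below estimates where a touch occurred between two sensors from the difference in arrival times. The researchers describe multi-VPU localization only as a next step, so this is not their method: the one-dimensional geometry, the cross-correlation delay estimate, the function names, and the wave speed and sample rate used in the example are all assumptions.

```python
import math


def best_lag(a, b, max_lag):
    """Lag (in samples) by which b trails a, chosen to maximize cross-correlation."""
    def corr(lag):
        return sum(a[i] * b[i + lag]
                   for i in range(len(a)) if 0 <= i + lag < len(b))
    return max(range(-max_lag, max_lag + 1), key=corr)


def locate(sig_a, sig_b, spacing_m, speed_m_s, sample_rate_hz):
    """Estimate touch position (metres from sensor A) on the line between A and B.

    With A at 0 and B at spacing_m, a touch at x arrives at A after x / v and
    at B after (spacing_m - x) / v, so x = (spacing_m - v * (t_b - t_a)) / 2.
    """
    max_lag = math.ceil(spacing_m / speed_m_s * sample_rate_hz)
    delay_s = best_lag(sig_a, sig_b, max_lag) / sample_rate_hz  # t_b - t_a
    return (spacing_m - speed_m_s * delay_s) / 2


def pulse_at(delay_samples, length=300):
    """Synthetic test signal: a decaying burst starting at delay_samples."""
    sig = [0.0] * length
    for n in range(50):
        sig[delay_samples + n] = math.exp(-n / 10.0) * math.sin(2 * math.pi * n / 8.0)
    return sig


# Usage with assumed values: sensors 1 m apart, 500 m/s wave speed, 50 kHz sampling.
# A touch 0.3 m from sensor A reaches A after 30 samples and B after 70 samples.
position = locate(pulse_at(30), pulse_at(70),
                  spacing_m=1.0, speed_m_s=500.0, sample_rate_hz=50_000)
```

In practice the wave speed depends on the material and would have to be calibrated, and a two-dimensional surface would need more than two sensors.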

The researchers are also exploring the use of VPUs for medical sensing, including picking up delicate noises such as the sounds of joints and connective tissues as they move. The high-fidelity audio data VPUs provide could power just-in-time analytics about a person’s health, Sample says.

The research is partially funded by Meta Platforms, Inc.

Study: SAWSense: Using Surface Acoustic Waves for Surface-bound Event Recognition

Project Website: https://theisclab.com/projects/SAW-Sense/SAW-Sense.html
